
I am VP of Technology (AI) at Vektor Medical, where I work on the 🫀. Previously, I was a research scientist at the Icahn School of Medicine at Mount Sinai and at the National Institute of Mental Health. I completed my Master's and PhD in Bioengineering across Imperial College London and NIMH/NIH, where I was advised by Simon Schultz and Bruno B. Averbeck and funded by the Wellcome Trust. I received my Bachelor's in Computational Neuroscience from Princeton University, where I also completed the pre-medical track. During that time I worked with Uri Hasson at the Princeton Neuroscience Institute, and I also spent some time at the MPI for Brain Research in Frankfurt, Germany.

I also enjoy thinking about deep tech ventures in biology and healthcare. During my PhD, I also spent time working with (bio)tech startups (Herophilus, Startupbootcamp London) and in venture capital (Atomico, Panacea Innovation).

Email / LinkedIn / Google Scholar / Git / Twitter / Goodreads / Substack


I am passionate about computational neuroscience, machine learning, and computational biology more broadly. I am interested in how information is stored, extended and retrieved in neural networks in the brain. I am also interested in modeling network dysfunction and in restoring healthy function by correcting network imbalances. In my work I combine computational modeling with tools from machine learning, information engineering, signal processing and statistics. I enjoy working across disciplines.


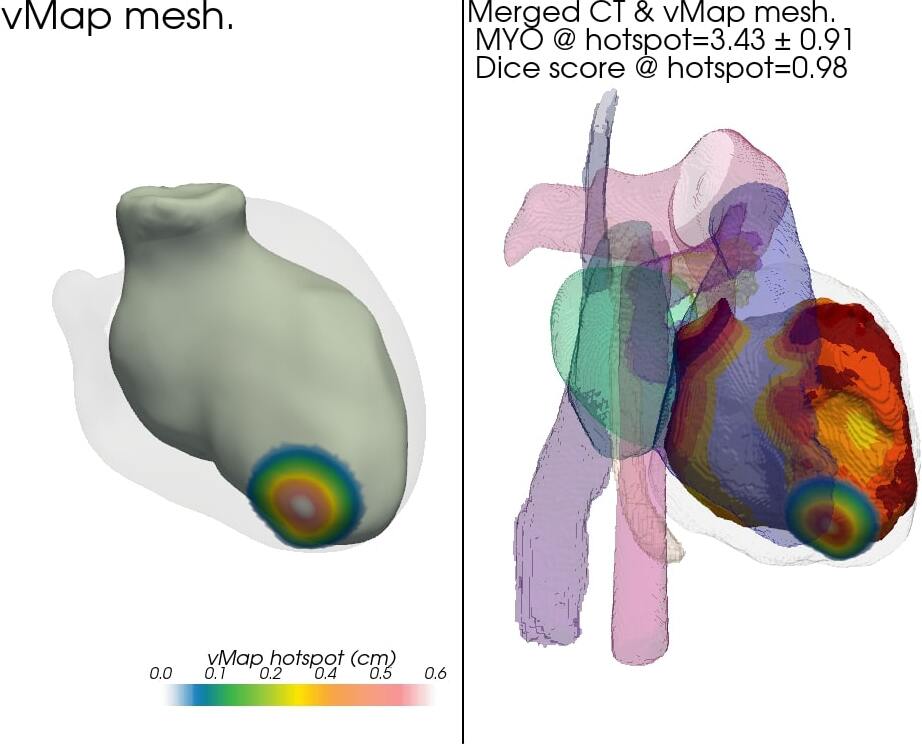

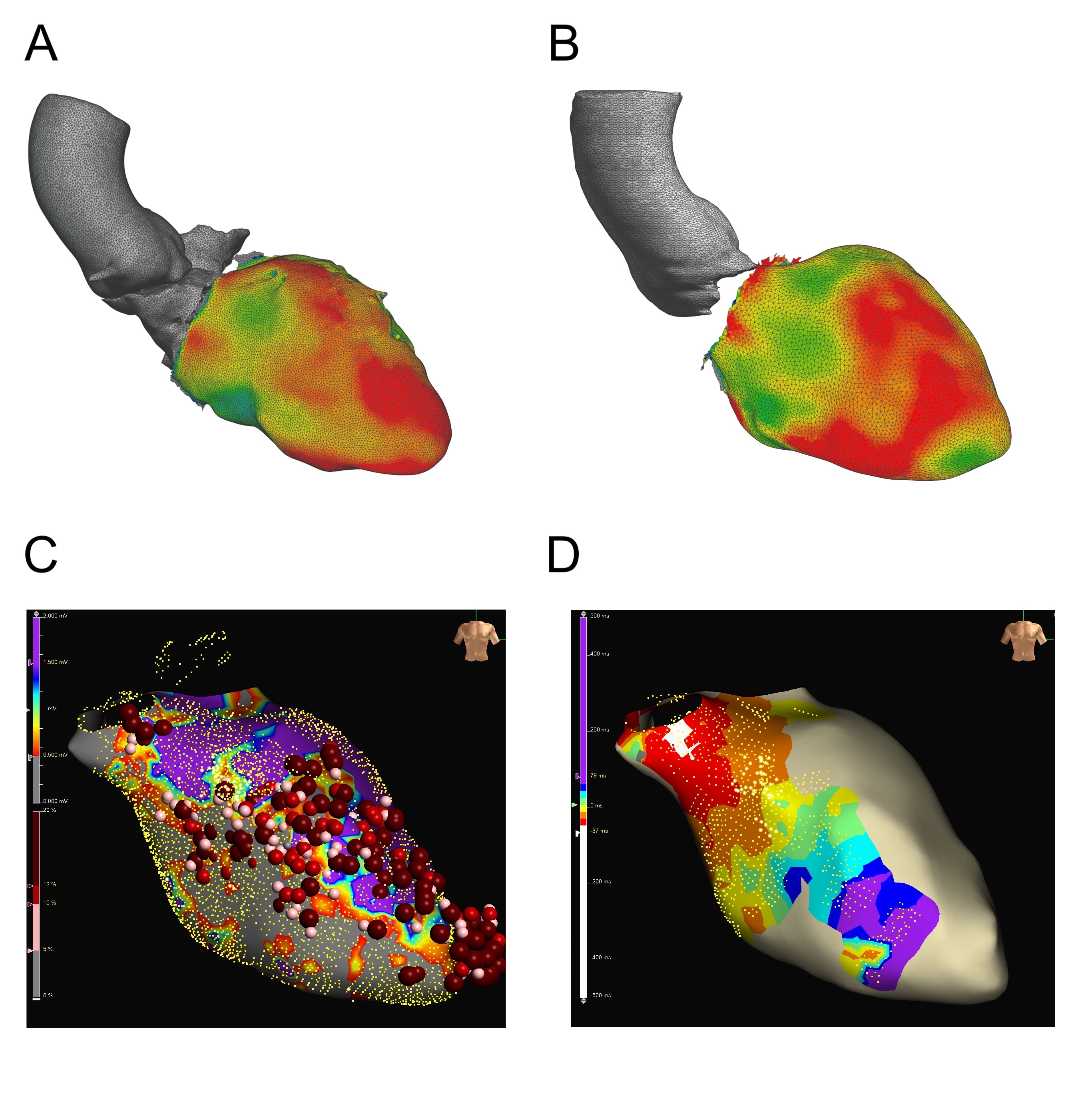

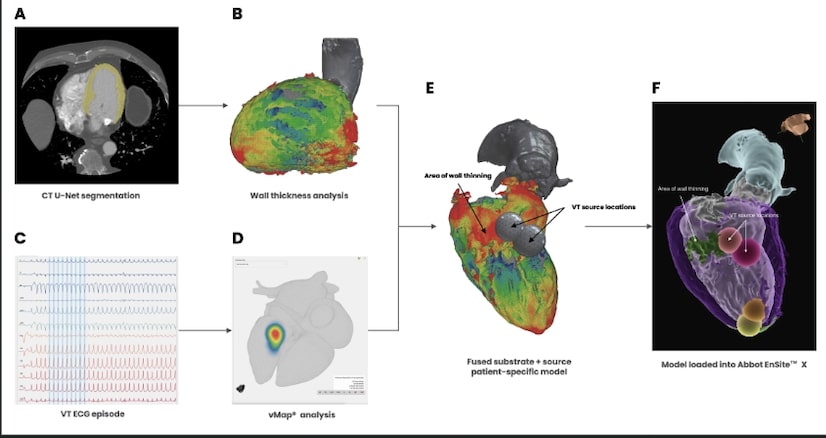

G Ho, CT Villongco, FT Han, JC Hsu, K Hoffmayer, F Raissi, GK Feld, DE Krummen, CD Márton. HRS, 2024


JJ Bussell, RP Badman, CD Márton, ES Bromberg-Martin, LF Abbott, K Rajan, R Axel. bioRxiv, 2023

Animals are motivated to acquire knowledge of their world. They seek information that does not influence reward outcomes, suggesting that information has intrinsic value. We asked whether mice value information and whether a representation of information value can be detected in mouse orbitofrontal cortex (OFC).


CD Márton, C Villongco, DE Krummen, G Ho. HRX, 2023


C Villongco, CD Márton, T Moyeda, DE Krummen, G Ho. HRX, 2023


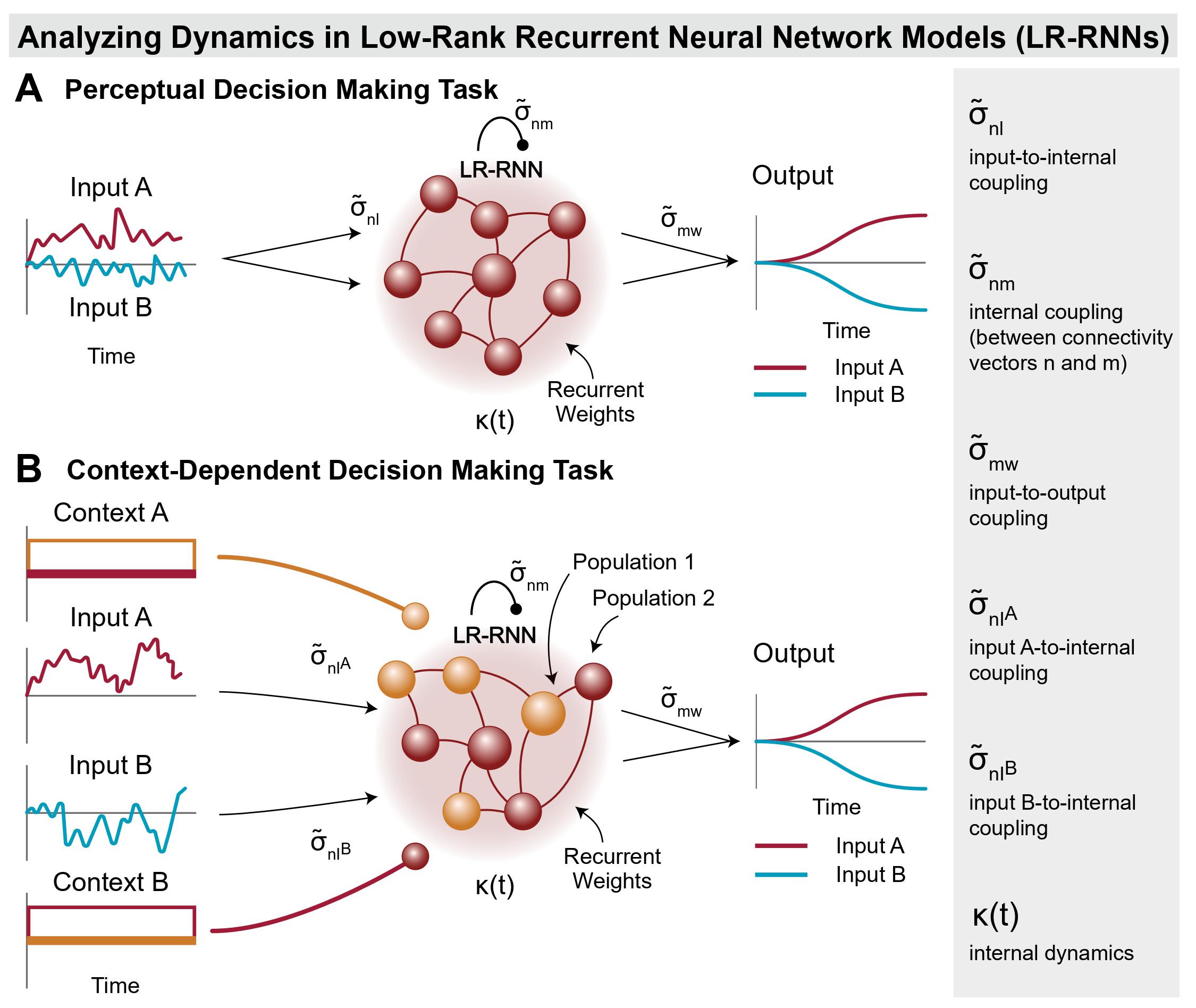

CD Márton, S Zhou, K Rajan. Nature Neuroscience, 2022

The solutions found by neural networks to solve a task are often inscrutable. We have little insight into why a particular structure emerges in a network. By reverse engineering neural networks from dynamical principles, Dubreuil, Valente et al. show how neural population structure enables computational flexibility.
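
Dubreuil, Valente et al. work with low-rank recurrent networks, whose connectivity is a sum of a few outer products. The sketch below illustrates one property that makes such networks interpretable, assuming a rank-one rate network with arbitrary illustrative vectors `m`, `n_vec` and input vector `I` (these names, sizes and values are hypothetical and not taken from the paper): starting from rest, the 500-dimensional population trajectory stays inside the plane spanned by `m` and `I`, so it is fully described by two latent variables.

```python
import numpy as np

def simulate_rank_one_rnn(n_units=500, t_steps=200, dt=0.1, seed=0):
    """Euler-integrate dx/dt = -x + J tanh(x) + I u(t) with rank-one J = m n^T / N."""
    rng = np.random.default_rng(seed)
    m = rng.standard_normal(n_units)                  # output-direction vector (illustrative)
    n_vec = 2.0 * m + rng.standard_normal(n_units)    # selection vector, overlapping with m
    J = np.outer(m, n_vec) / n_units                  # rank-one connectivity matrix
    I = rng.standard_normal(n_units)                  # input direction
    x = np.zeros(n_units)                             # network starts at rest
    traj = np.empty((t_steps, n_units))
    for t in range(t_steps):
        u = 1.0 if t < 50 else 0.0                    # brief input pulse, then silence
        x = x + dt * (-x + J @ np.tanh(x) + I * u)    # J @ tanh(x) is always a multiple of m
        traj[t] = x
    return traj, m, I

traj, m, I = simulate_rank_one_rnn()
basis = np.column_stack([m, I])                       # candidate 2-D latent subspace
coeffs, *_ = np.linalg.lstsq(basis, traj.T, rcond=None)
residual = np.linalg.norm(traj.T - basis @ coeffs) / np.linalg.norm(traj)
print(f"fraction of activity outside span(m, I): {residual:.1e}")
```

The residual is at floating-point level because every update adds only multiples of `m` and `I` to a state that starts at zero.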


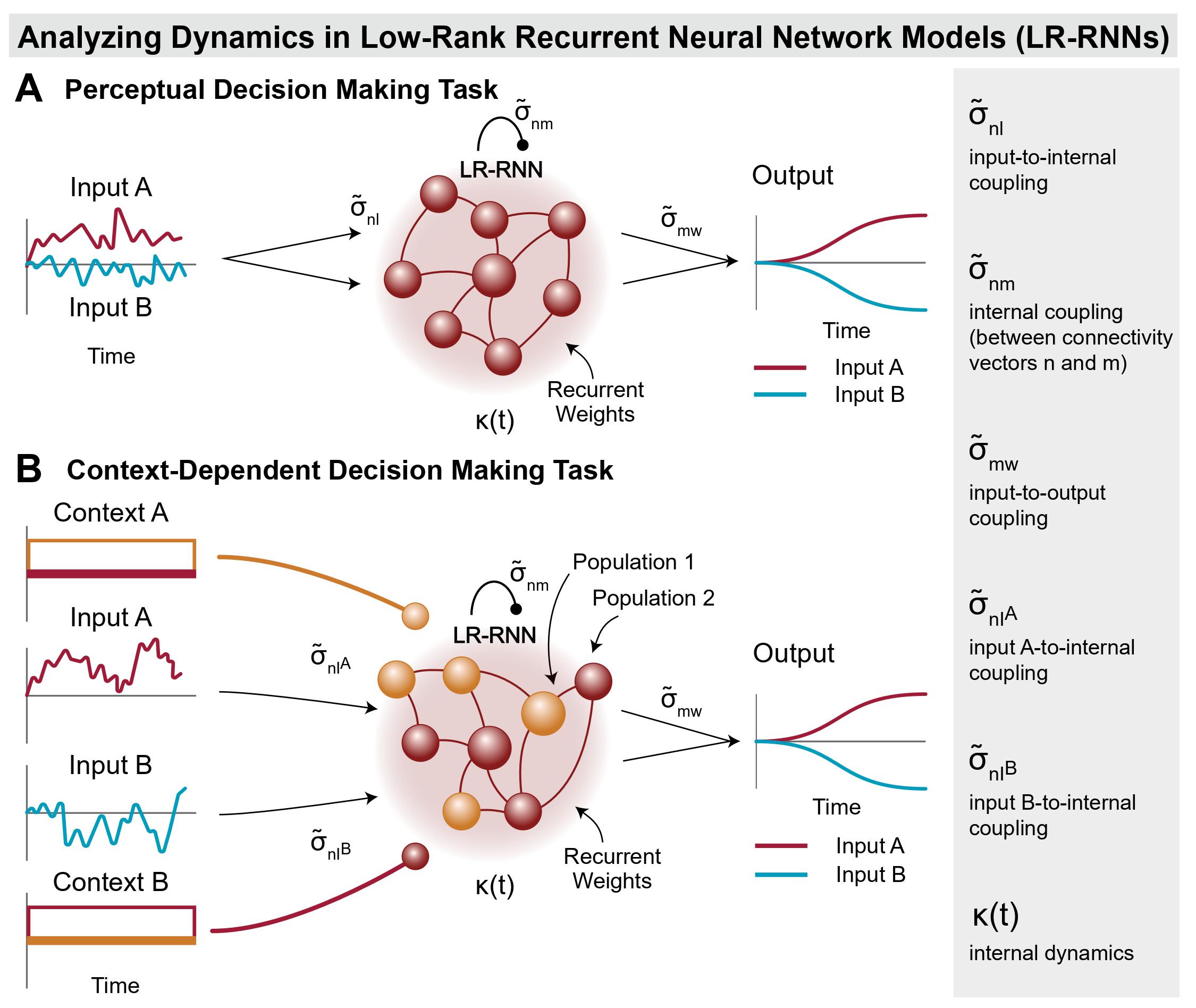

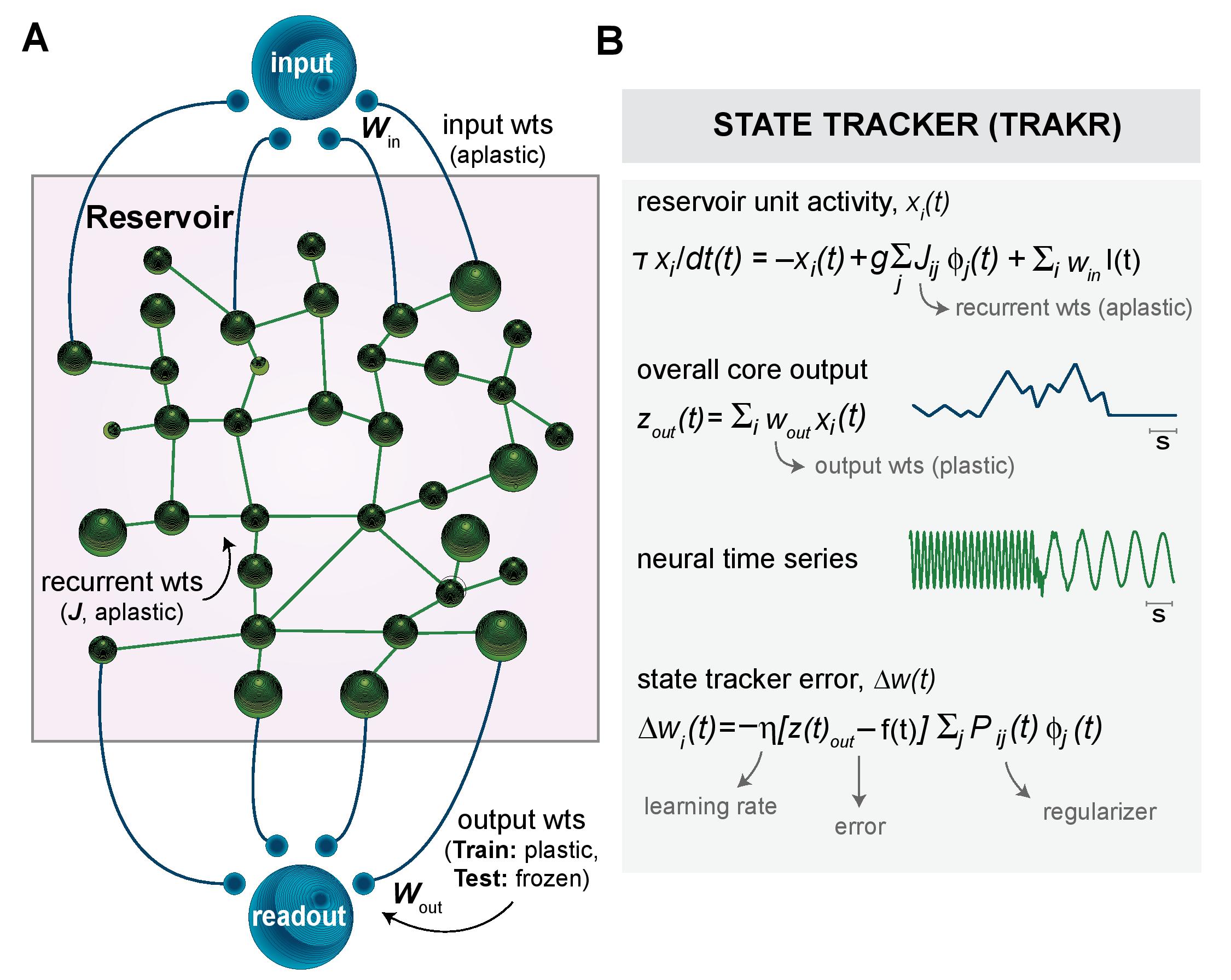

F Afzal*, CD Márton*, K Rajan. bioRxiv, 2021 (*contributed equally)

It remains challenging to correctly distinguish nonlinear time-series patterns because of the high intrinsic dimensionality of such data. We introduce a reservoir-based tool, state tracker (TRAKR), which provides the high accuracy of ensembles or deep supervised methods while preserving the benefits of simple distance metrics in being applicable to single examples of training data (one-shot classification).
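
As a rough illustration of reservoir-based one-shot classification, the sketch below fits the linear readout of a fixed random echo state network to predict the next sample of a single template per class, then labels a new series by the class whose readout predicts it with the lowest error. This is a generic echo-state-network construction, not the TRAKR implementation; the class name `ReservoirTracker`, the reservoir size, spectral radius, ridge penalty and washout are all hypothetical, illustrative choices.

```python
import numpy as np

class ReservoirTracker:
    """Fixed random reservoir whose linear readout predicts the next sample of one template."""

    def __init__(self, n_units=200, spectral_radius=0.9, input_scale=0.5, ridge=1e-2, seed=0):
        rng = np.random.default_rng(seed)
        W = rng.standard_normal((n_units, n_units))
        self.W = W * spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))  # rescale recurrence
        self.w_in = input_scale * rng.standard_normal(n_units)
        self.ridge = ridge
        self.w_out = None

    def _states(self, signal):
        x = np.zeros(self.W.shape[0])
        states = np.empty((len(signal), x.size))
        for t, s in enumerate(signal):
            x = np.tanh(self.W @ x + self.w_in * s)   # reservoir weights are never trained
            states[t] = x
        return states

    def fit(self, template, washout=100):
        S = self._states(template)[washout:-1]        # states at time t ...
        y = template[washout + 1:]                    # ... predict the sample at t + 1
        A = S.T @ S + self.ridge * np.eye(S.shape[1])
        self.w_out = np.linalg.solve(A, S.T @ y)      # ridge-regression readout
        return self

    def error(self, signal, washout=100):
        S = self._states(signal)[washout:-1]
        return float(np.mean((S @ self.w_out - signal[washout + 1:]) ** 2))

def one_shot_classify(templates, signal):
    """Fit one tracker per single-example class; pick the class with the lowest tracking error."""
    errors = {label: ReservoirTracker().fit(tpl).error(signal) for label, tpl in templates.items()}
    return min(errors, key=errors.get)

t = np.arange(400)
templates = {"slow": np.sin(0.05 * t), "fast": np.sin(0.3 * t)}
query = np.sin(0.3 * t + 1.0)                         # same dynamics as "fast", different phase
print(one_shot_classify(templates, query))
```

Using prediction error rather than a raw distance between signals makes the comparison insensitive to phase: the query never matches the "fast" template sample by sample, yet the tracker fit on that template predicts it well.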


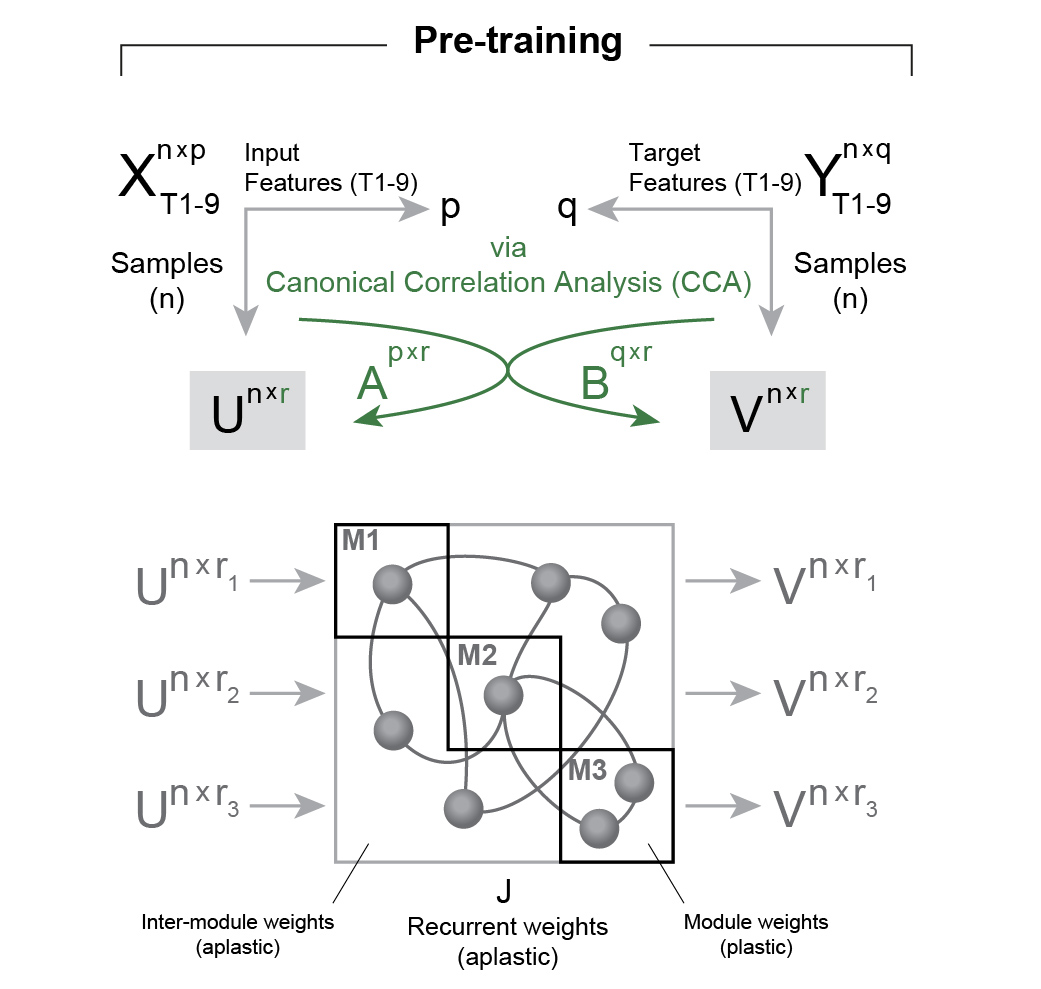

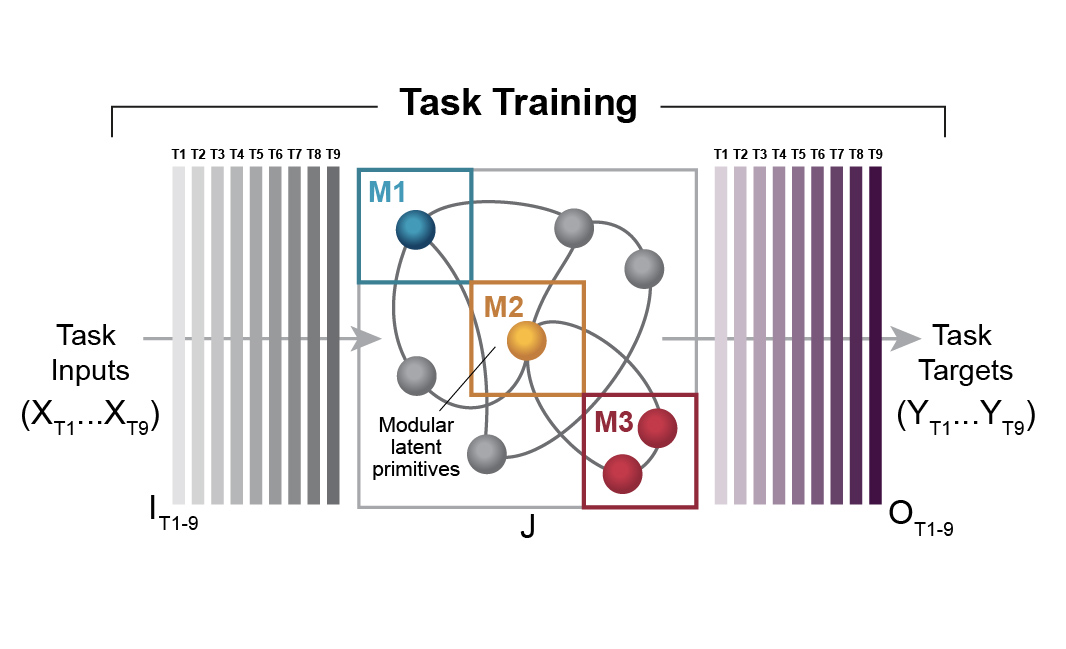

CD Márton, L Gagnon, G Lajoie, K Rajan. arXiv, 2021

Combining two brain-inspired inductive biases, which we call functional and structural, we propose a system that learns new tasks by building on top of pre-trained latent dynamics organised into separate recurrent modules. The resulting model, which we call a Modular Latent Primitives (MoLaP) network, allows for learning multiple tasks effectively while keeping parameter counts and updates low. We also show that the skills acquired with our approach are more robust to a broad range of perturbations than those acquired with other multi-task learning strategies, and that they facilitate generalisation to new tasks.
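
A minimal sketch of the parameter-efficiency idea, assuming fixed random recurrent modules as stand-ins for MoLaP's pre-trained latent-dynamics modules and a ridge-regression readout per task. Both are simplifications rather than the MoLaP training procedure, and `FrozenModule` and `ModularMultiTask` are hypothetical names. The modules are shared and never updated, so each new task only adds one readout.

```python
import numpy as np

class FrozenModule:
    """Fixed recurrent module; a stand-in for a pre-trained dynamical primitive (hypothetical)."""

    def __init__(self, n_units, n_inputs, seed):
        rng = np.random.default_rng(seed)
        W = rng.standard_normal((n_units, n_units))
        self.W = 0.9 * W / np.max(np.abs(np.linalg.eigvals(W)))
        self.W_in = rng.standard_normal((n_units, n_inputs))

    def run(self, inputs):
        x = np.zeros(self.W.shape[0])
        states = np.empty((len(inputs), x.size))
        for t, u in enumerate(inputs):
            x = np.tanh(self.W @ x + self.W_in @ u)
            states[t] = x
        return states

class ModularMultiTask:
    """Shared frozen modules plus one trainable linear readout per task."""

    def __init__(self, modules, ridge=1e-3):
        self.modules = modules
        self.ridge = ridge
        self.readouts = {}

    def features(self, inputs):
        return np.concatenate([m.run(inputs) for m in self.modules], axis=1)

    def learn_task(self, name, inputs, targets):
        H = self.features(inputs)                     # module weights stay untouched
        A = H.T @ H + self.ridge * np.eye(H.shape[1])
        self.readouts[name] = np.linalg.solve(A, H.T @ targets)

    def predict(self, name, inputs):
        return self.features(inputs) @ self.readouts[name]

    def trainable_params(self):
        return sum(w.size for w in self.readouts.values())

rng = np.random.default_rng(0)
model = ModularMultiTask([FrozenModule(50, 1, seed=s) for s in range(3)])
u = rng.uniform(-0.5, 0.5, size=(500, 1))
delayed = np.concatenate([[0.0], u[:-1, 0]])          # task A: recall the previous input
model.learn_task("recall", u, delayed)
model.learn_task("sum2", u, u[:, 0] + delayed)        # task B: sum of the last two inputs
recall_error = np.mean((model.predict("recall", u) - delayed) ** 2) / np.var(delayed)
print(model.trainable_params(), f"{recall_error:.3f}")
```

With three 50-unit modules, each scalar-output task adds 150 trainable weights, so the two tasks together add 300.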


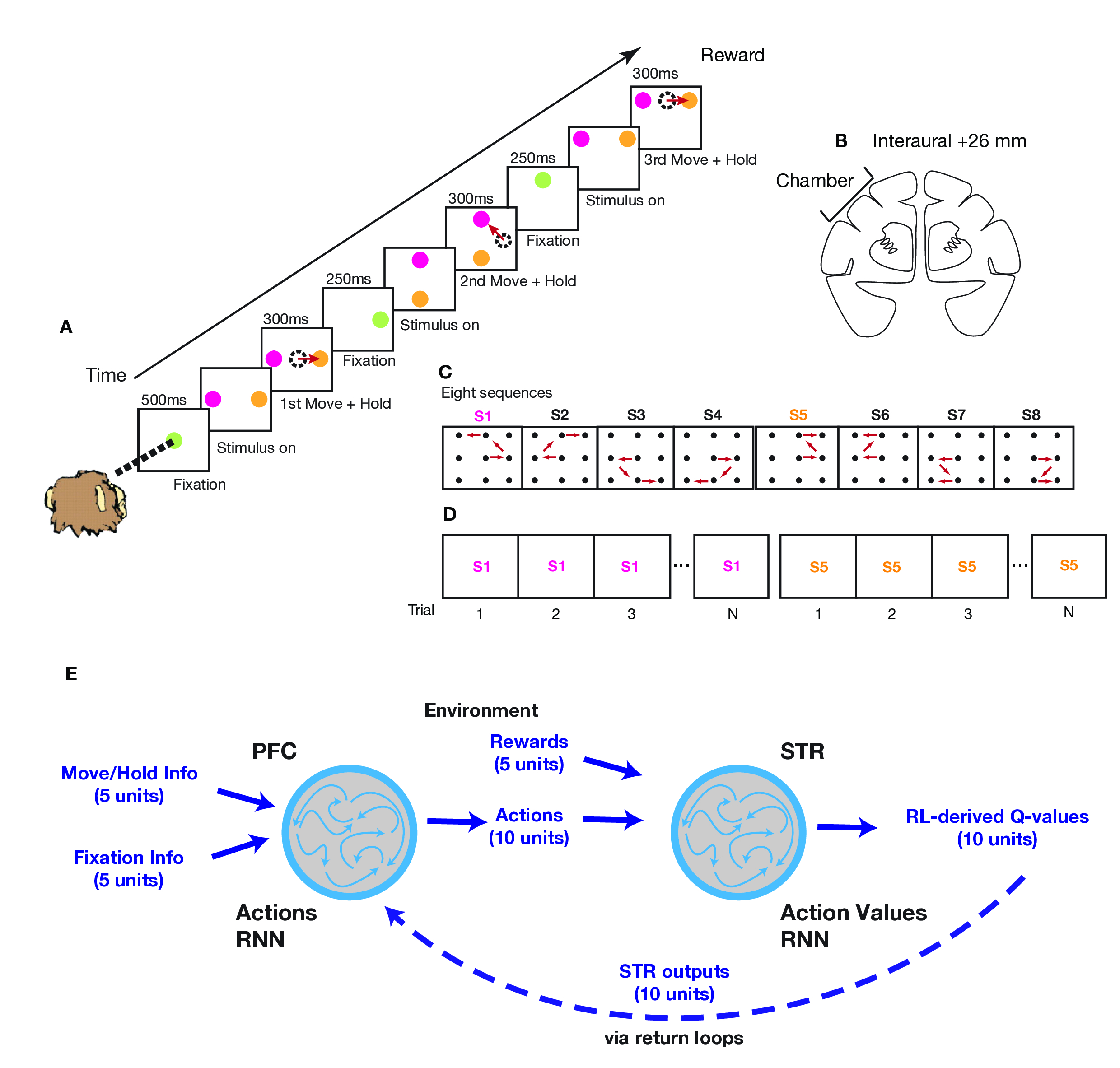

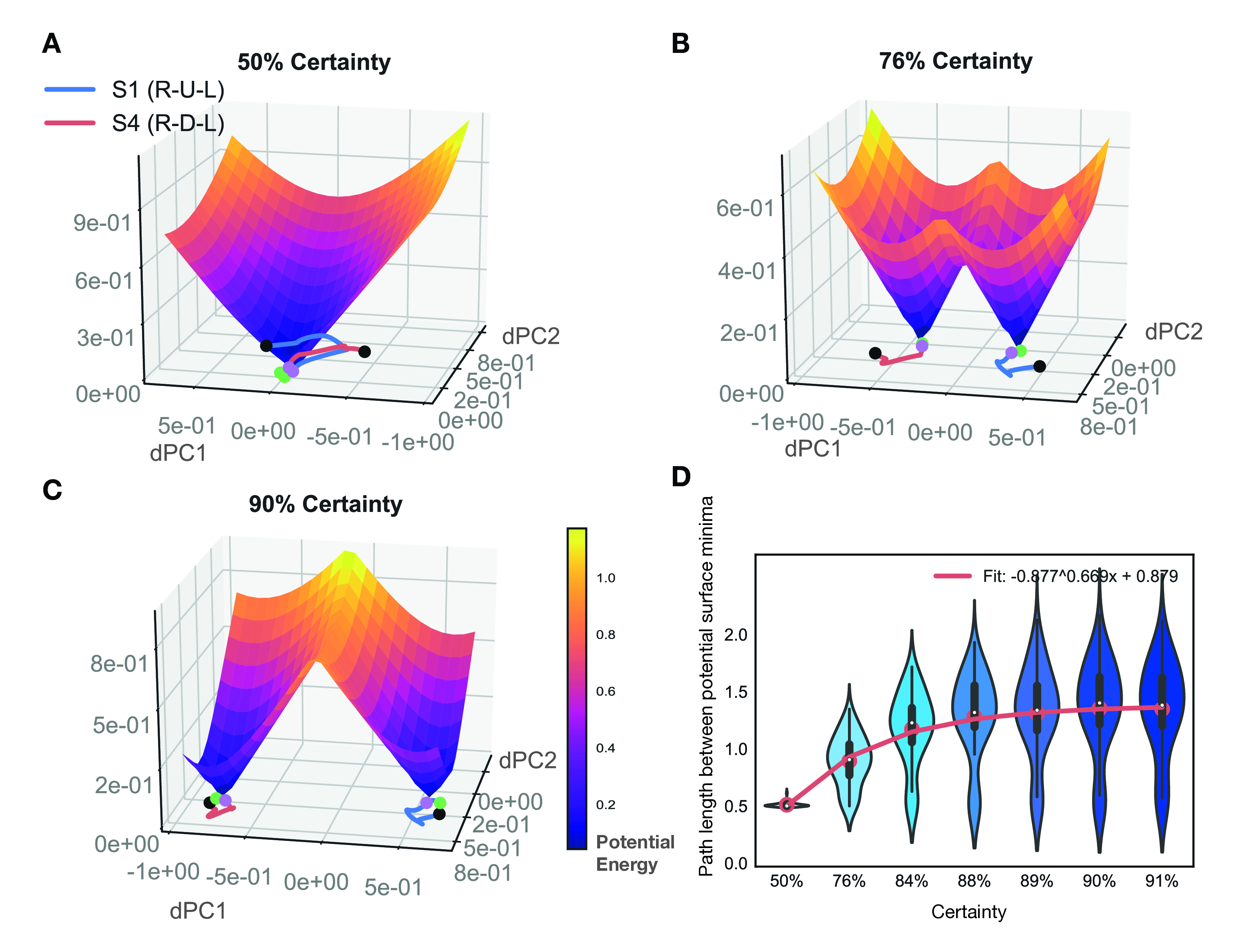

CD Márton, SR Schultz, BB Averbeck. Neural Networks, 2020 / bioRxiv

Learning to select appropriate actions based on their values is fundamental to adaptive behavior. This form of learning is supported by fronto-striatal systems. The computational mechanisms that shape the neurophysiological responses, however, are not clear. To examine this, we developed a recurrent neural network (RNN) model of the dlPFC-dSTR circuit and trained it on an oculomotor sequence learning task.
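
The sketch below shows one hypothetical way to wire a two-population rate RNN for a circuit model of this kind: a block mask fixes which population may connect to which, task inputs enter the first block, and action probabilities are read out from the second. The population sizes, the absence of direct feedback from the second block to the first, the eight-way softmax over saccade targets and all function names are illustrative assumptions, not the architecture of the Neural Networks paper.

```python
import numpy as np

def make_two_area_rnn(n_pfc=60, n_str=40, n_in=4, n_actions=8, seed=0):
    """Random weights for a two-population rate RNN with block-masked connectivity."""
    rng = np.random.default_rng(seed)
    n = n_pfc + n_str
    mask = np.zeros((n, n))                  # rows = target units, columns = source units
    mask[:n_pfc, :n_pfc] = 1.0               # "PFC" -> "PFC" recurrence
    mask[n_pfc:, n_pfc:] = 1.0               # "STR" -> "STR" recurrence
    mask[n_pfc:, :n_pfc] = 1.0               # "PFC" -> "STR" projection; no STR -> PFC weights
    W = mask * rng.standard_normal((n, n)) / np.sqrt(n)
    W_in = np.zeros((n, n_in))
    W_in[:n_pfc] = rng.standard_normal((n_pfc, n_in))                   # inputs reach "PFC" only
    W_out = rng.standard_normal((n_actions, n_str)) / np.sqrt(n_str)    # readout from "STR" only
    return {"W": W, "W_in": W_in, "W_out": W_out, "mask": mask, "n_pfc": n_pfc}

def run_trial(params, inputs, dt=0.1):
    """Euler-integrate the network and return softmax action probabilities at every step."""
    W, W_in, W_out, n_pfc = params["W"], params["W_in"], params["W_out"], params["n_pfc"]
    x = np.zeros(W.shape[0])
    probs = np.empty((len(inputs), W_out.shape[0]))
    for t, u in enumerate(inputs):
        x = x + dt * (-x + W @ np.tanh(x) + W_in @ u)
        logits = W_out @ np.tanh(x[n_pfc:])
        p = np.exp(logits - logits.max())    # numerically stable softmax
        probs[t] = p / p.sum()
    return probs

params = make_two_area_rnn()
inputs = np.random.default_rng(1).standard_normal((30, 4))
probs = run_trial(params, inputs)
print(probs.shape)
```

To preserve the block structure during training, any weight update to `W` would be multiplied elementwise by `mask`.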


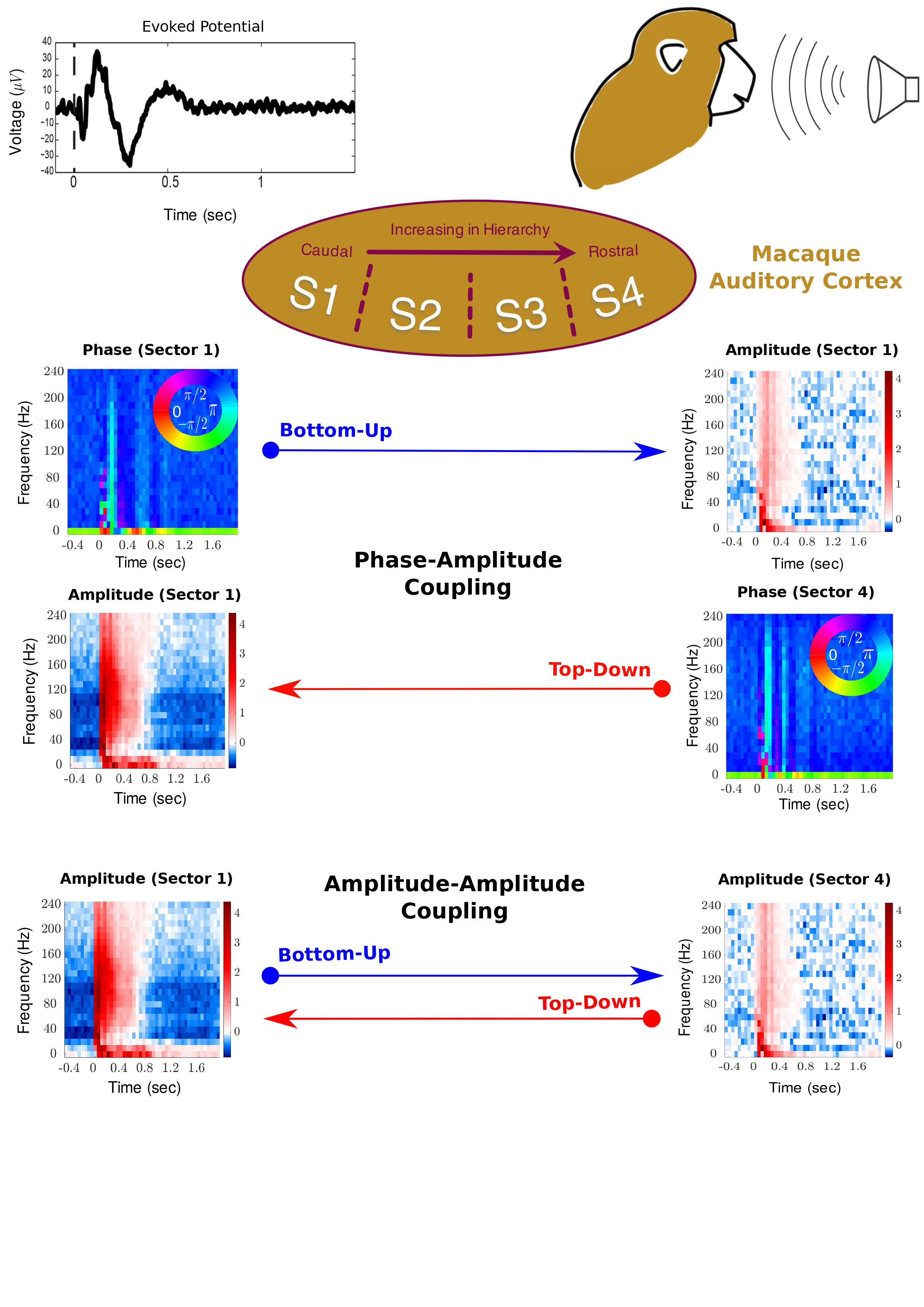

CD Márton, M Fukushima, CR Camalier, SR Schultz, BB Averbeck. eNeuro, 2019 / bioRxiv

The brain consists of highly interconnected cortical areas, yet the patterns in directional cortical communication are not fully understood, in particular with regard to interactions between different signal components across frequencies. We developed a unified, computationally advantageous Granger-causal framework and used it to examine bi-directional cross-frequency interactions across four sectors of the auditory cortical hierarchy in macaques. Our findings extend the view of cross-frequency interactions in auditory cortex, suggesting they also play a prominent role in top-down processing.
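
For readers new to Granger causality, a minimal time-domain, pairwise version asks whether the past of `x` improves an ordinary-least-squares prediction of `y` beyond what `y`'s own past provides, and reports the log ratio of residual variances. This is the textbook measure on simulated data, not the unified cross-frequency framework from the eNeuro paper; the model order `p=5` and the simulated coupling are arbitrary illustrative choices.

```python
import numpy as np

def lagged(z, p):
    """Design matrix whose row for time t holds z[t-1], ..., z[t-p] (for t = p .. T-1)."""
    T = len(z)
    return np.column_stack([z[p - k:T - k] for k in range(1, p + 1)])

def granger(x, y, p=5):
    """Granger causality from x to y: ln(residual variance without x / with x's past)."""
    target = y[p:]
    restricted = np.column_stack([np.ones(len(target)), lagged(y, p)])  # y's own past only
    full = np.column_stack([restricted, lagged(x, p)])                  # plus x's past

    def resid_var(X):
        beta, *_ = np.linalg.lstsq(X, target, rcond=None)
        return np.var(target - X @ beta)

    return float(np.log(resid_var(restricted) / resid_var(full)))

rng = np.random.default_rng(0)
T = 5000
x = rng.standard_normal(T)
y = np.zeros(T)
for t in range(1, T):
    y[t] = 0.5 * y[t - 1] + 0.8 * x[t - 1] + 0.2 * rng.standard_normal()  # x drives y
print(f"x -> y: {granger(x, y):.2f}, y -> x: {granger(y, x):.3f}")
```

Because `x` drives `y` with a one-step lag, the measure is large from `x` to `y` and close to zero in the reverse direction; frequency-resolved variants decompose the same variance ratio across frequencies.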


Colab, 2021

Tired of grappling with art so abstract it makes the most obstinate Sotheby's appraiser cringe? Worry no more.


Medium, 2020

The world keeps turning, the clock never stops, and I just want to do the most optimal thing. So the faster I figure myself out, the sooner I can get started doing what matters. We often hear sentences like “Be the best you can be”, “Know thyself”, “Travelling makes you grow”, “Stay on your path”, or “Be more conscious of yourself”. This article will try to attack platitudes head-on and provide some soothing answers, like a pill popped quickly, but less addictive and hopefully longer-lasting.


Medium, 2018

Can we discern fundamental computational principles by which neural networks in the brain operate? By connecting individual brushstrokes into meaningful wholes, this article will strive to generate insight into how things might fit together.


For mind-bending language games,
